Searches For ‘Taylor Swift’ On X Come Up Empty After Sexually Explicit Deepfakes Go Viral

File Photo by: zz/John Nacion/STAR

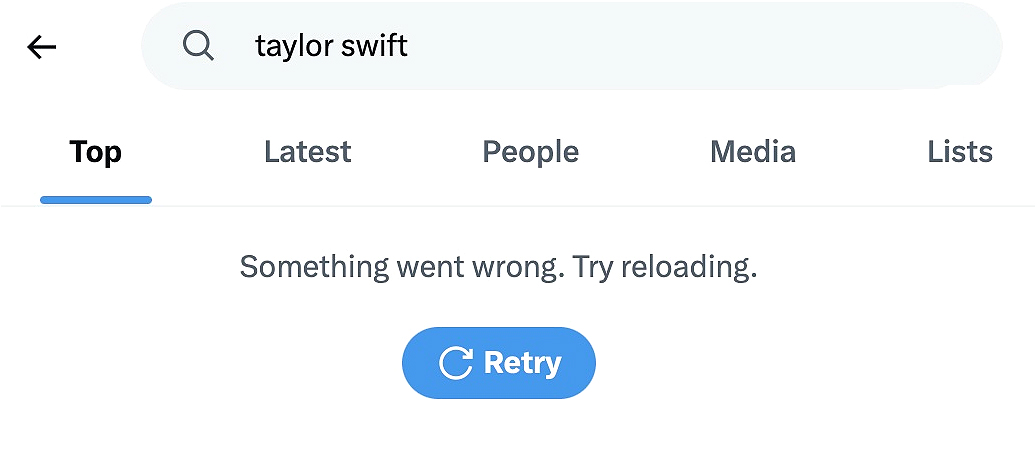

Searches for pop sensation Taylor Swift came up empty Saturday on X (formerly Twitter) just days after sexually explicit deepfakes went viral on the platform.

The message “Something went wrong. Try reloading.” appeared whenever the singer’s name was typed into the X search box.

According to NBC News, “This comes after deepfakes portraying Swift nude and in sexual scenarios were circulated on X Wednesday. The images can be created using artificial intelligence tools that develop new, fake images, or by taking a real photo and ‘undressing’ it.”

The X-rated altered photos were viewed more than 27 million times before the posting account was suspended, NBC reported.

The photos caused such an uproar that they became a topic of conversation in the White House Briefing Room.

Press Secretary Karine Jean-Pierre told ABC News Friday, “We are alarmed by the reports of the…circulation of images that you just laid out — of false images to be more exact, and it is alarming.

“While social media companies make their own independent decisions about content management, we believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation, and non-consensual, intimate imagery of real people.”

ABC News added that “Deepfake pornography is often described as image-based sexual abuse.”

Some fans said X had “banned” Swift’s name from its search.

The White House acknowledges that the smexually explicit Taylor Swift AI images are ‘alarming’ and is urging Congress to take legislative action to address these AI images of Taylor.

And now “Taylor Swift” is banned from “X” search. Good job🙏

— 𝙎𝙝𝙖𝙣𝙠𝙮 🚩 (@shanktankk) January 27, 2024

📲| “Taylor Swift” is now banned from the Twitter search. pic.twitter.com/2dMDlyjJmp

— The Swift Society (@TheSwiftSociety) January 27, 2024

🚨🤯 Taylor Swift Has Been BANNED From X’s Search Engine‼️ pic.twitter.com/Vb8OrIo1io

— Censored Men (@CensoredMen) January 27, 2024

Taylor Swift” has been banned from “X” search, marking the first step towards safeguarding her. Let’s refrain from searching Taylor Swift AI photos now. The creator of such AI will face consequences. We stand with you, Tay #TaylorSwiftAl

#TaylorSwift #TaylorSwiftErasTourSG pic.twitter.com/mMS5D6mEsH

— Ch Ali (@chali_007) January 27, 2024

NBC News reached out to X for comment.